Welcome to my blog. It’s a catch-all and a sandbox of writings about this and that. But mostly, it’s a place to put things that I’d probably lose track of without it.

Posts

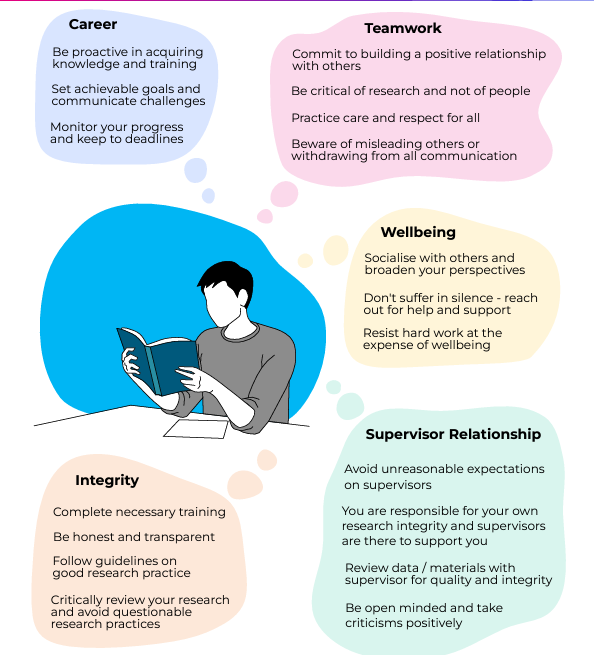

A conversation about open science

open science

psychological science

reproducibility

Quarto

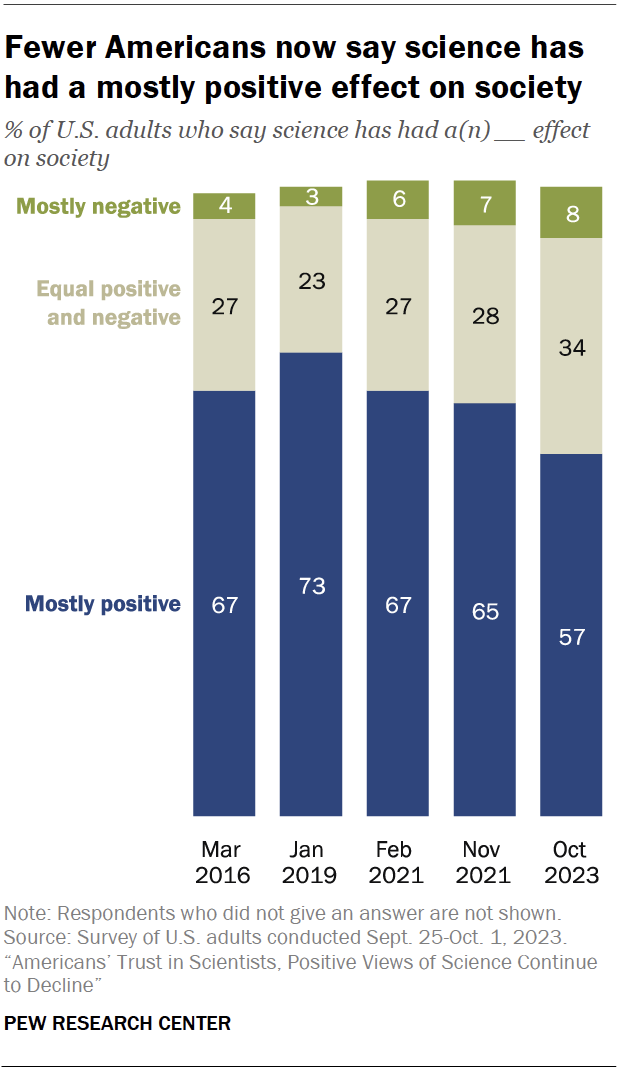

trust in science

Automation…is the way

R

GitHub

Paperpile

visual acuity project

research

Cool Paperpile/GitHub integration

GitHub

Paperpile

visual acuity project

automation

Please release me

R

Python

databraryr

Databrary

software

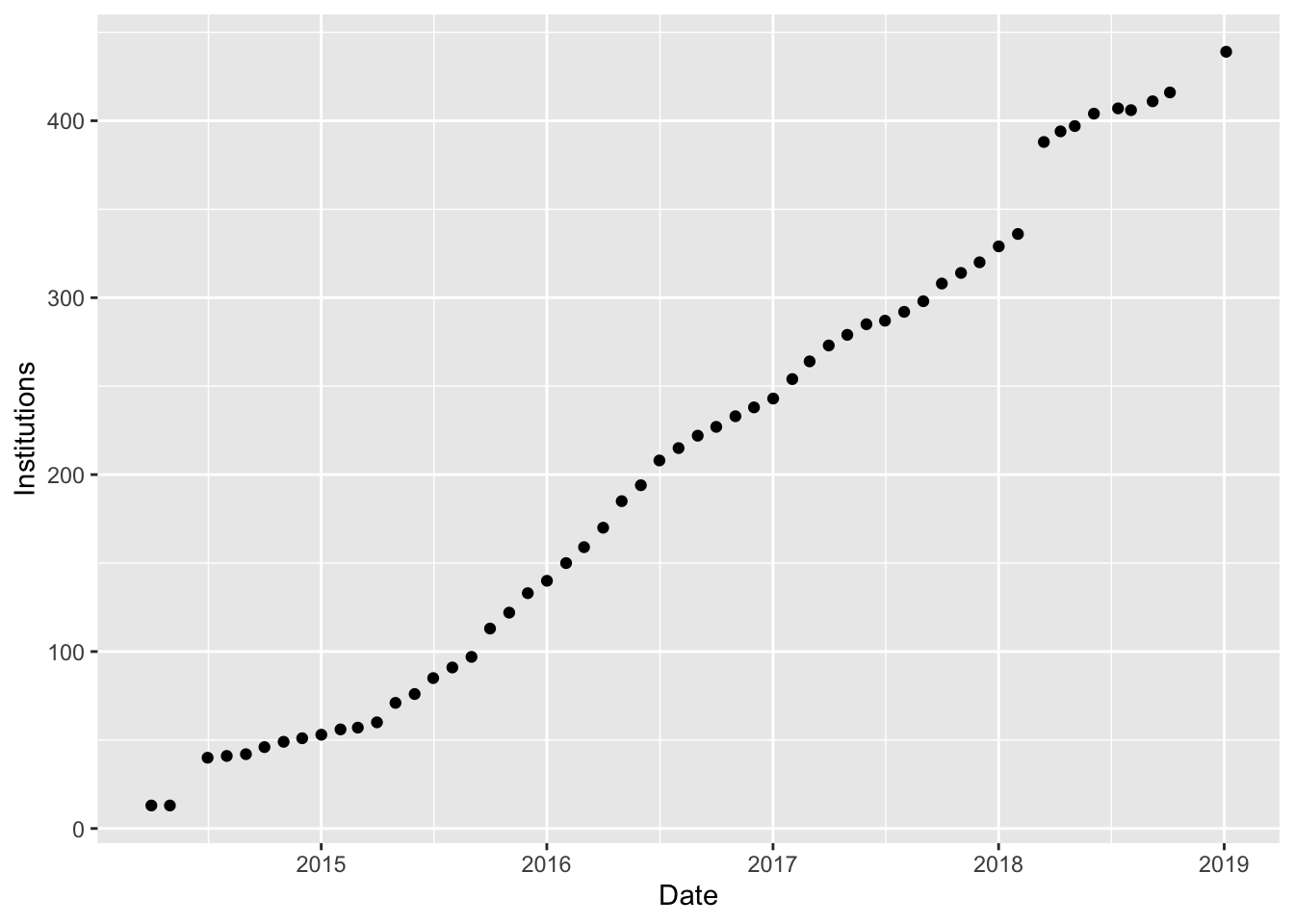

Delivering the package

Databrary

databraryr

R

reproducibility

open science

software

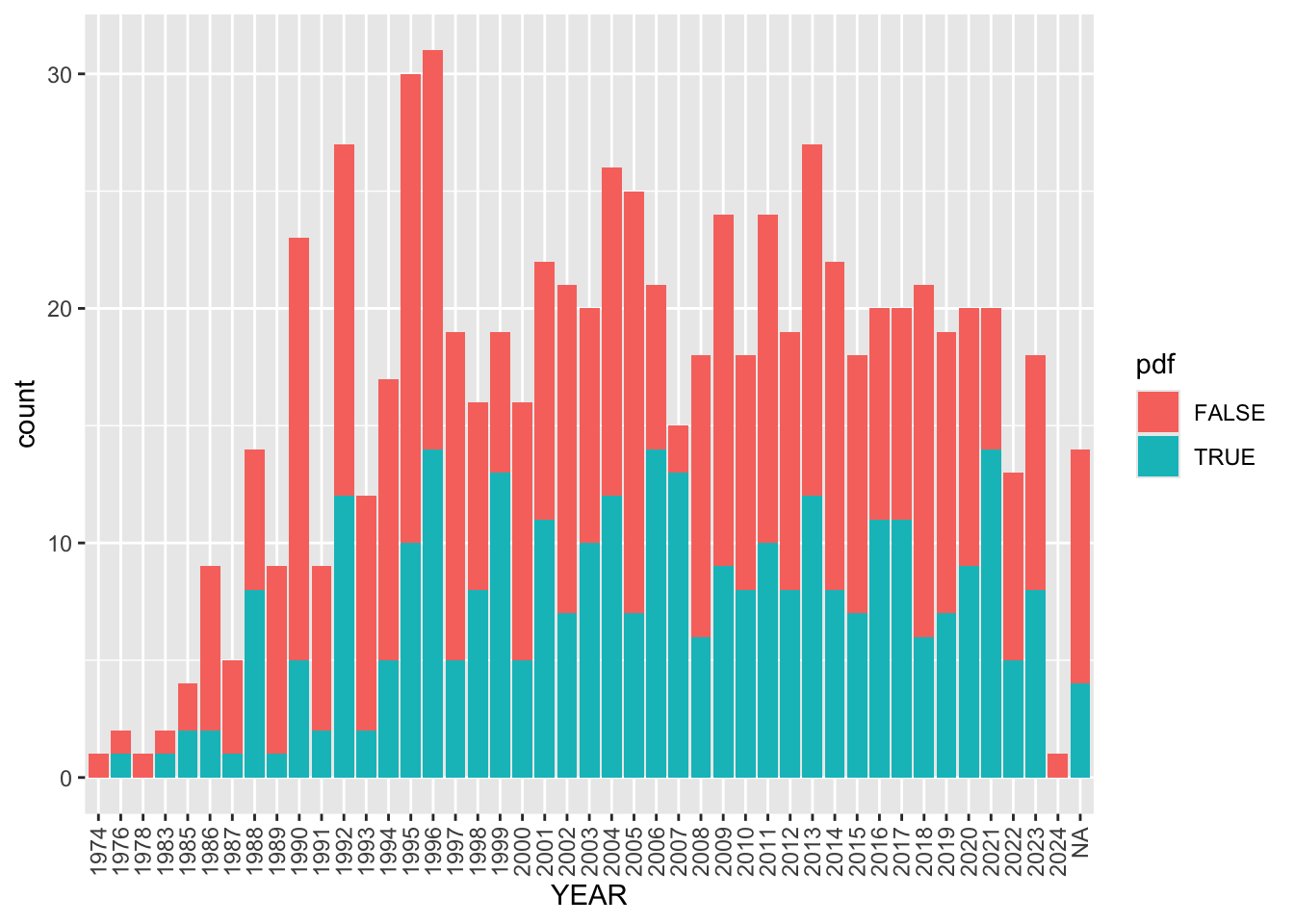

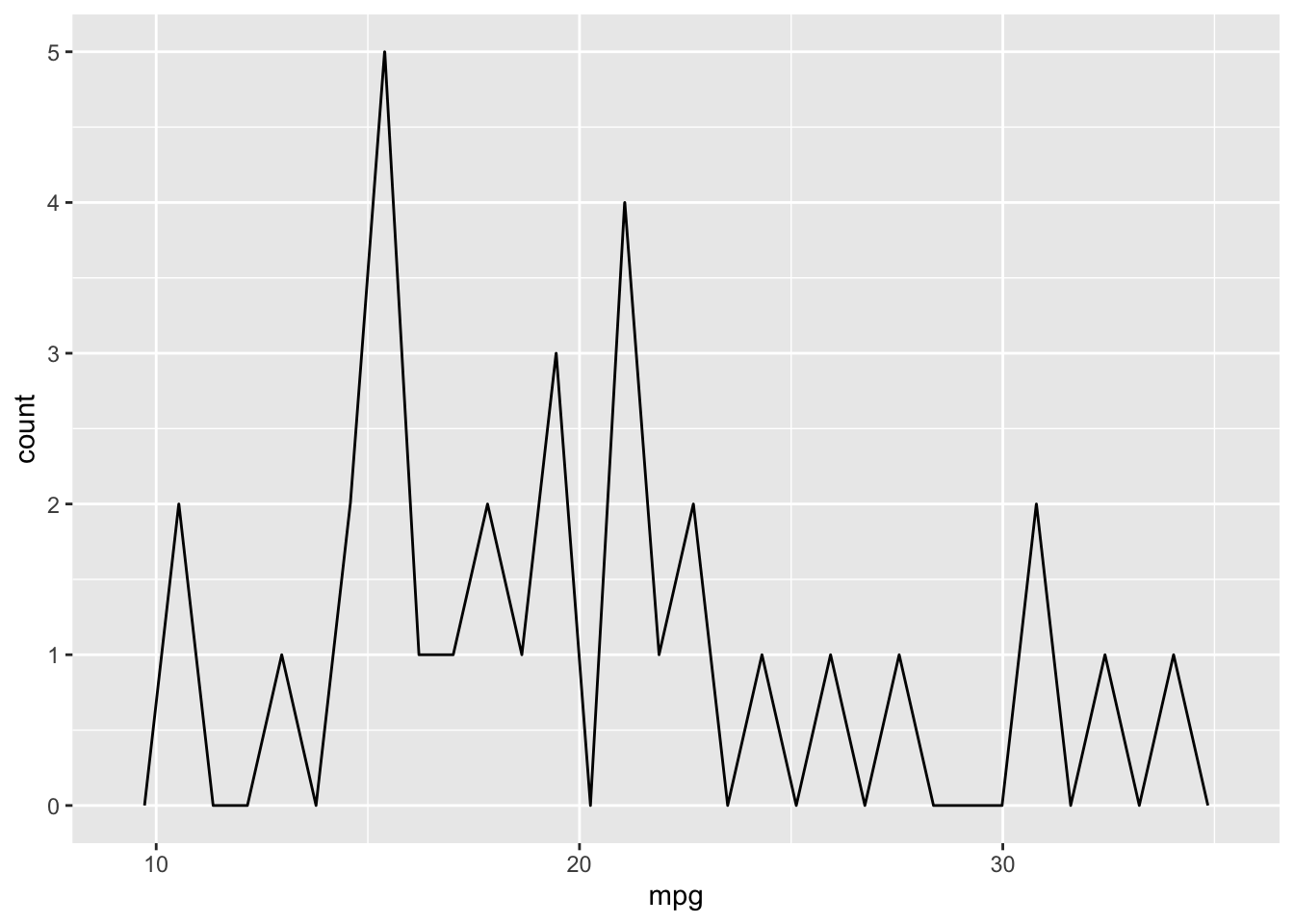

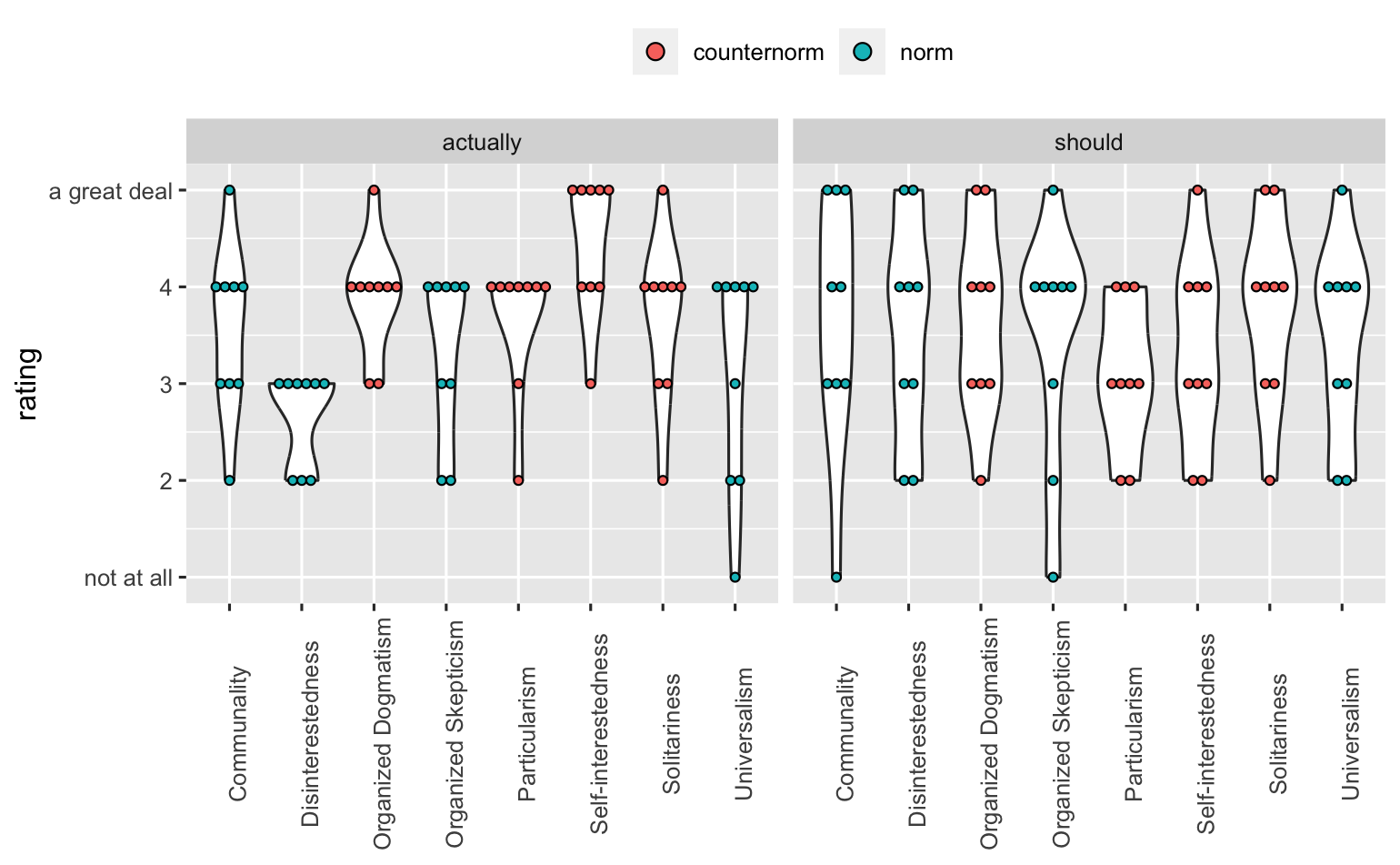

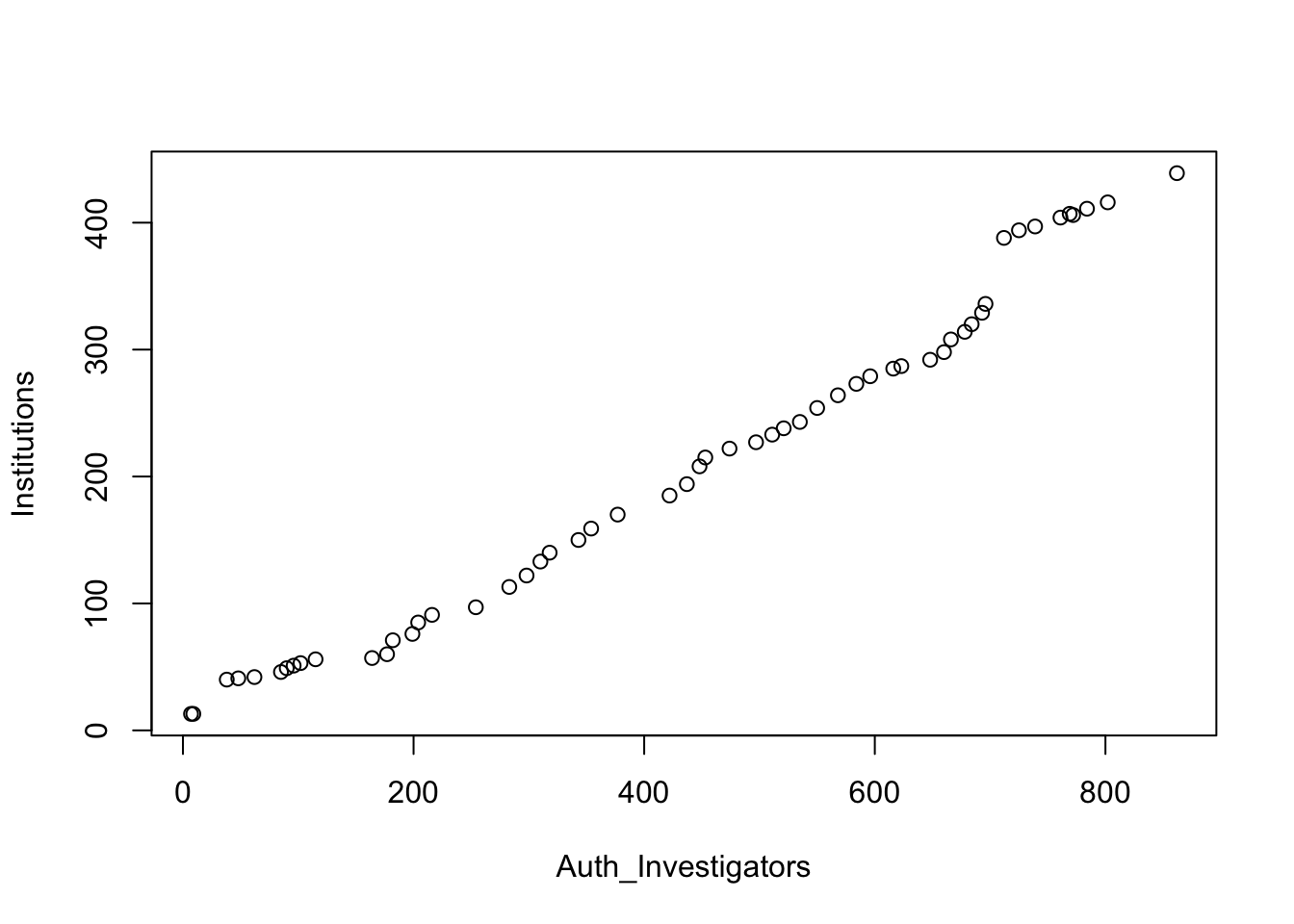

The plot thickens

R

ggplot2

PLAY

KoBoToolbox

visualization

bookdown

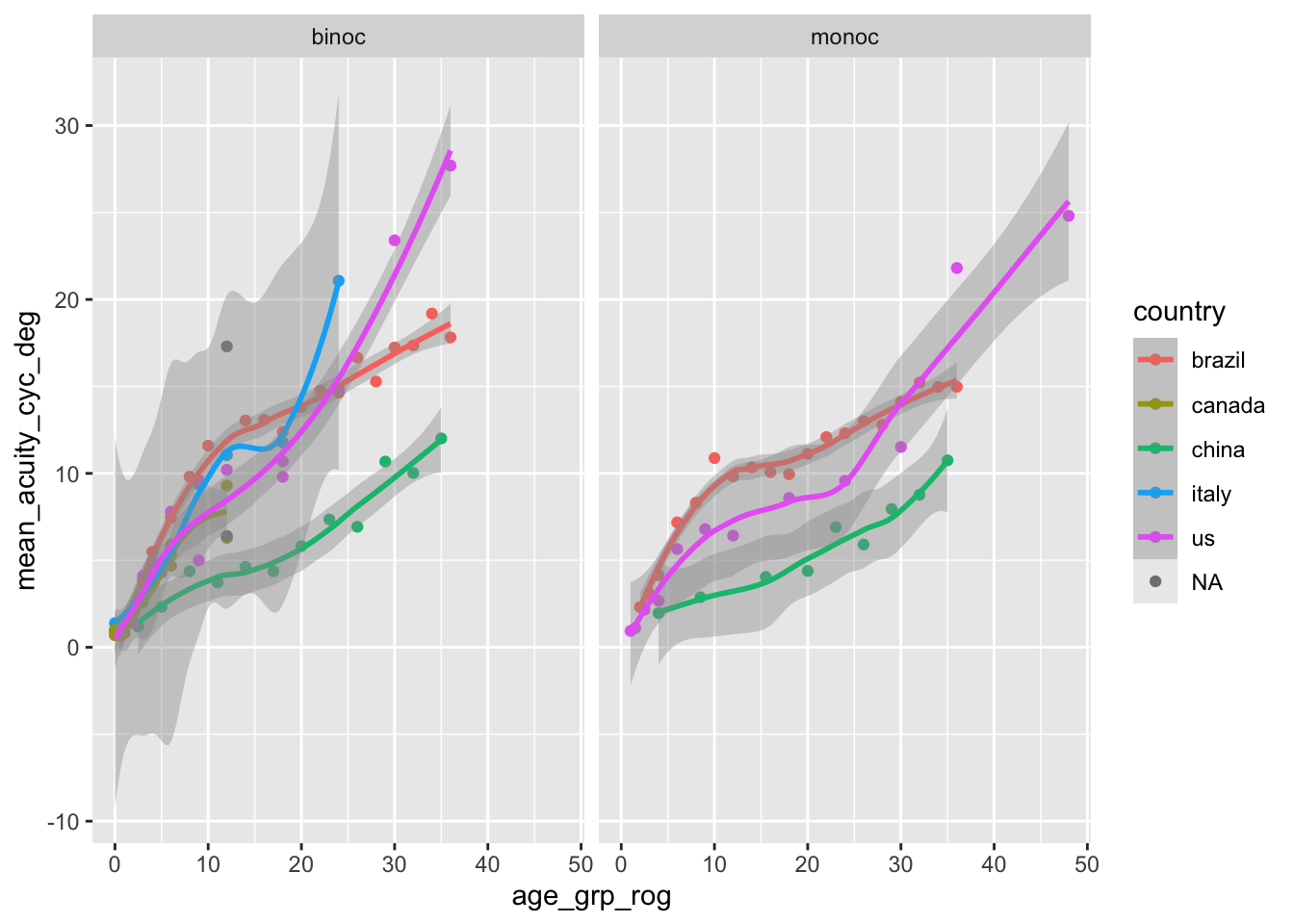

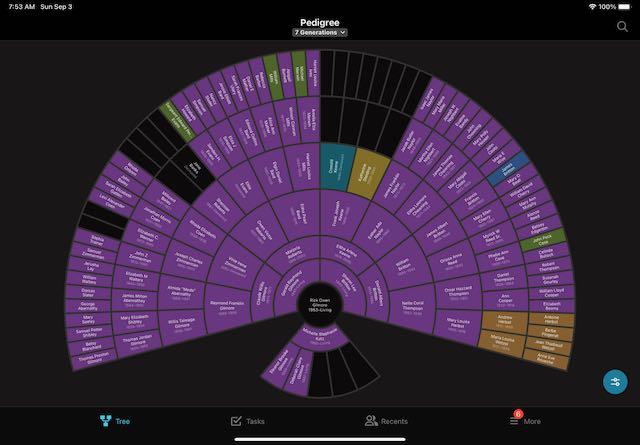

Legacy Project: Visual Acuity

vision

visual acuity project

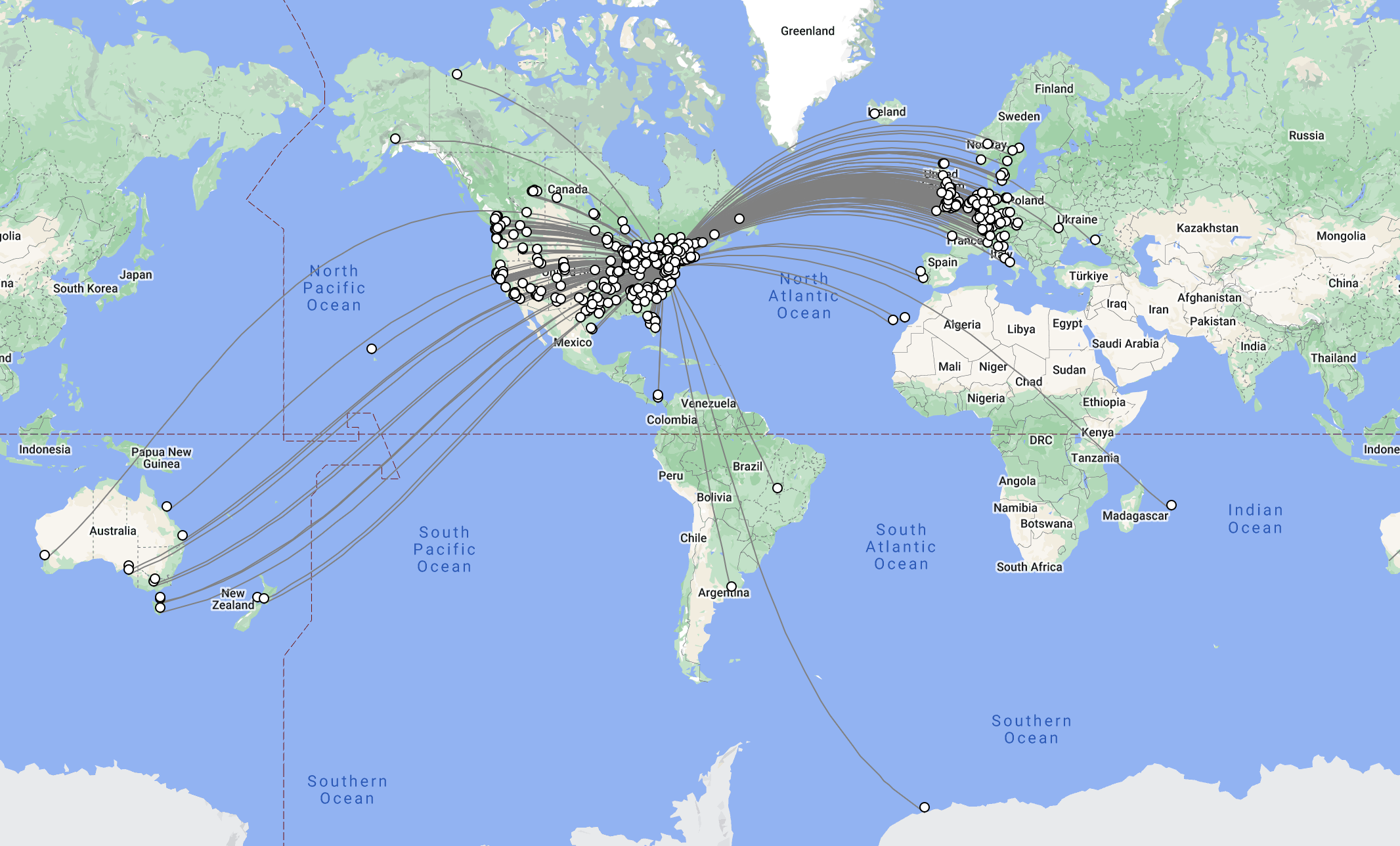

meta-analysis

research

No foolin’

talks

open science

reproducibility crisis

quarto

Devilish details

open science

ethics

data sharing

pre-registration

In search of the ethogram

science

ethogram

behavior

ontology

behaviorome

No matching items